It has been a year since the release of ChatGPT, and this post reflects on some approaches and developments from the perspective of an international school leader.

When ChatGPT was released, we were in yet another round of covid restrictions and remote learning (the final one, though we didn’t know it at the time). Everyone was sick, and probably the last thing we needed in the moment was something as significant as the proliferation of GenAI across education. What was this thing? What did it mean for us? How would we respond?

Before ChatGPT, a few of us had been looking at AI, starting with Kai-Fu Lee & Chen Qiufan’s 2021 book AI2041, a mostly optimistic vision of life in 2041. It struck a chord, as the characters in the stories of 2041 are the children in our schools today. I got into some reading, some exploration and experimentation (including early GPT-2 writing and basic image generation), and consideration of broader AI topics, but it was a niche pursuit that drew little attention.

Amidst the chaos of sickness, remote learning and the eventual, super high-energy celebrations, events, projects and the return to “normal” school, we took the time to gather resources, experiment, discuss and plan. It was clear across the community, and globally, that GenAI was seen as both threat and opportunity; a source of unpredictability that would re-catalyse conversations and actions around futures of learning. It was immediately apparent that there were significant ethical issues with GenAI, and a volatile edtech marketplace was forming around AI tools.

A year on, developments in GenAI have moved beyond my predictions in 2022. From the power of LLMs, image generators, video translation and edtech tools, to the significance of issues in bias, privacy, data security and integrity, GenAI has dominated conversations not just in EdTech, but in broader educational discussions. Massive companies have made headlines, books are on sale, and agencies, schools and universities are getting to grips with it.

Also a year on, we are seeing more people at a point of readiness to engage in conversations and innovations with GenAI. 2022 was too chaotic. Early 2023 was too soon; we had to focus on community. Summer 2023 was the first time most of us could finally go home. Early autumn 2023 was the first ‘normal’ start since 2019, and this has been an intense first term.

In parallel with all this, I’m working on an EdD with the University of Bath, focusing the research for my own assignments on GenAI in international education.

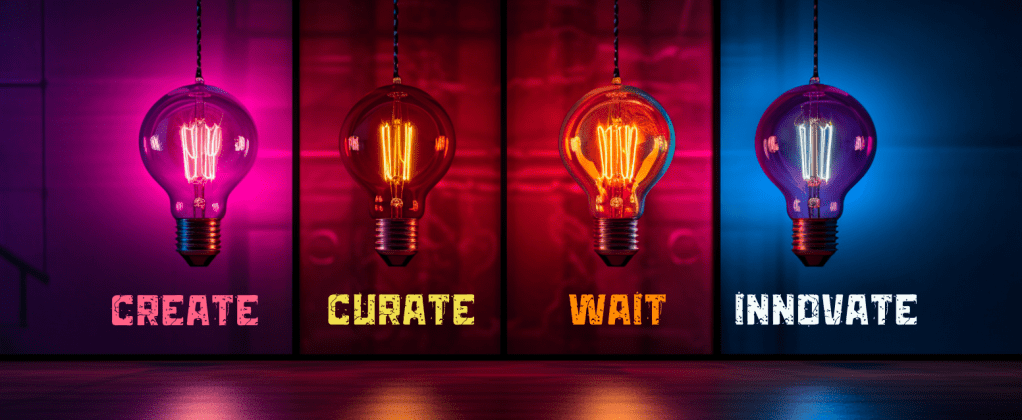

In the background, those of us with the capacity and interest kept the research and development going. On reflection, here’s how it looked: Create, Curate, Wait, Innovate.

Create

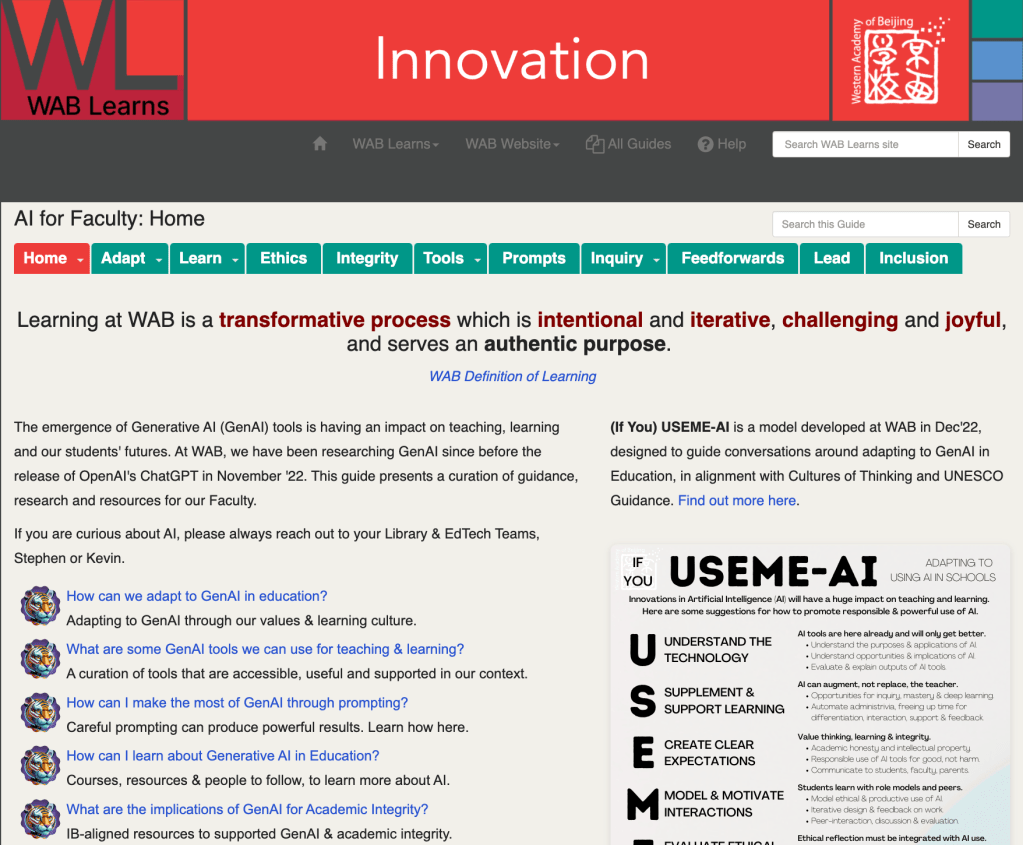

As GenAI tools and discussions developed, play was essential. What could they do? Could it be useful? Were they appropriate? I kept a mega-thread of experiments from Oct’22-Jul’23 (scroll back) and we connected with others online to share our learning. Noticing that culture would be more important in a school’s adaptations to GenAI than policy or tech tools, and seeing reactions ranging from ‘ban it’ to potentially unsafe uses, I created (If You) USEME-AI in Dec’22, as a frame for thinking through issues and adaptations. Our team created concise introductions for colleagues and we held some interesting sessions with parents to consider our approaches.

As some tools started to emerge as potentially useful, we created guides and support materials, to be used in an invitational way. Some early discussions and workshops with students surfaced areas of interest, and our fab library-tech teams started to support reasonable use of GenAI in research.

Curate

The internet got noisy. Really noisy. Facebook groups, blog posts, Twitter threadbois and marketing fluff all capitalised on new niches. Many were sharing great ideas, but to people coming new to the conversation, it could be really overwhelming. We started curating resources that could be of use to colleagues. I repurposed a pre-ChatGPT AI guide to collate research and issues, but even that became too much information, and so I rebuilt it in Oct’23 to make wayfinding easier for Faculty, Students and Parents.

Adaptation to GenAI is multilayered and complex: utility, applicability, safety & ethics need to be simultaneously considered, and this too can feel overwhelming. How do we support people’s journeys to thriving in a rapidly-changing space, when the future is uncertain and even their identities as educators are provoked? How do we keep ethical considerations in mind, whilst acknowledging that our learners need to be able to adapt to an AI world? All whilst experiencing the long tail of the pandemic.

Keeping it relevant is ongoing work. It has been useful over the last year, and is becoming more useful now because…

Wait

My belief in international education is that those who can do something, should – and we should share along the way in case it is of use to others. Building a current knowledge base with depth of understanding and curating as much as possible helps us to be ready. We can collaborate with those within and beyond our organisations, so that when those around us are ready to engage, we are ready to support.

Ethan Mollick, Wharton professor, researcher and innovator, has been a really useful source of information and experiments in AI. His work put me onto the “wait equation” (explanation here), coined by Robert L. Forward. In essence, timing is critical in innovation, and sometimes it is better to wait for new technologies (or resources) to achieve a goal than to jump onto the first thing. By giving GenAI a year to develop, and the edtech ecosystem time to settle, we can make decisions based on more powerful tools and more considered approaches.

Some ‘what-if’ examples of the wait equation at play in our context:

- What if we had jumped on (and paid for) early platforms in 2022/23 that ended up collapsing or just weren’t ready? No-one needed the distraction of mediocre tools so early, nor did we have the bandwidth to support them.

- What if we had pushed whole-school approaches before the culture was ready? When many people were really uncomfortable, or recovering from the pandemic, forcing this on teachers could have burned trust and good faith.

- What if we had gone blindly into approaches that opened doors to ethical risks? For example, adopting flawed, biased and potentially harmful “AI detectors” wholesale?

This first year of GenAI in education has resulted in loads of research and development. It took until September 2023 for UNESCO to finalise their Guidance, based on years of considered work, and now we have reliable resources in hand for supporting conversations and approaches.

It might feel counter-intuitive, but sometimes we need to innovate in the background while we wait for the moment. This rapid and accelerating (mine)field of education requires broad and inclusive perspectives, and over the last year, voices and resources of real value have emerged to help us navigate our way to the future.

Innovation suggests “fast, faster, fastest”, but in reality, patience pays off.

Now that we’re a year in, more and more colleagues are emerging as ready to engage meaningfully. Some might have felt threatened by GenAI last year, but are starting to seek support and guidance. Some might have given it a go early on and been disappointed by the outcomes. Others might have needed more time to recover from the pandemic’s impacts on school and life. Many have been engaging in their own reading, research and experimentation and are ready to put their ideas into practice, and some have just heard the right message at the right time from the right people to get unstuck.

Innovate

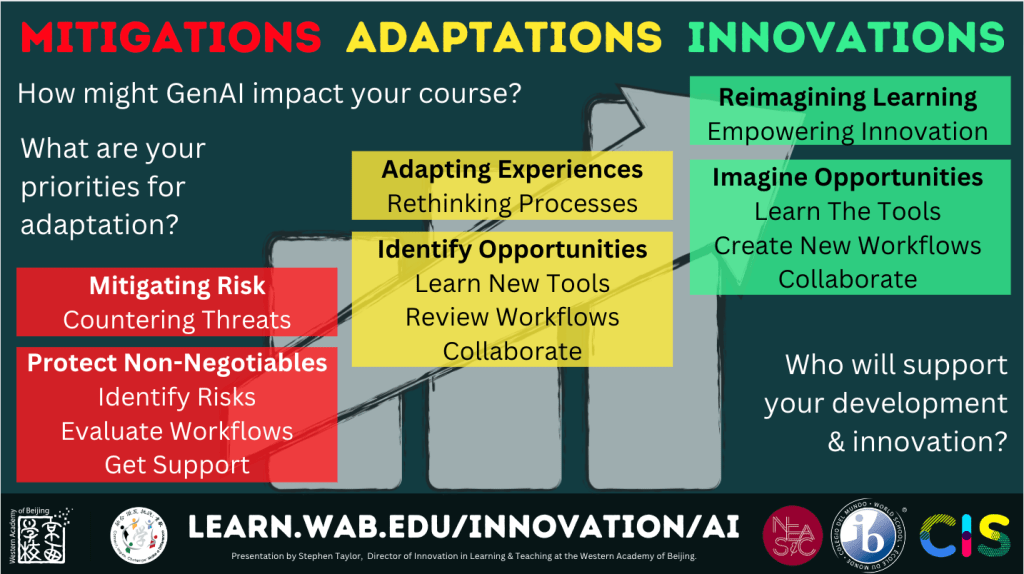

Innovation is a human endeavour, and innovators need to look beyond the technology and into the community. From work within and beyond our context I’ve been observing people in basically three states of being: mitigation, adaptation and innovation. Some people are in multiple states at the same time, depending on their micro-context, and we need to be attuned to their needs in the moment.

Mitigations

Some educators face constraints beyond their control, yet still feel the impacts of GenAI. They all want the best for their learners, but structures such as terminal assessment rules that have not yet evolved might be threatened by GenAI capabilities. They need support in navigating these tensions safely.

Adaptations

Those with more freedom or fewer constraints are in adaptation mode. They are rethinking processes to leverage GenAI, to maximise learning (and efficiencies), so they can ensure better learning experiences for their students. They might be using tools for inclusion, or supporting multilingual learners, or they could be looking for ways to safely introduce appropriate GenAI tools into learning experiences and research for students. They and their students need collaborators and partners in the work, as they iterate and share.

Innovations

A smaller group have the bandwidth to really push the boundaries of what is now possible in teaching and learning. They are rethinking their approaches entirely, and are willing to invest their energy in experiments that might be amazing, or might not work out (yet). They and their students need collaboration, permission and support in leading the pack and learning from their successes and missteps. They might most benefit from connection with innovators from other schools and contexts.

What have been some key takeaways from this first year of GenAI in education?

This has been an incredible year for professional learning, growth and development in international schools and in education in general. Some lingering thoughts and reflections, from our context:

- School culture will always beat technology and adversity, and that is where we need to focus our efforts.

- Innovation is a patience game, not a race to be first.

- A school’s approaches must be true to its beliefs and guiding statements. Strong vision and pedagogical approaches, independent of tech, are our ‘north stars’.

- Our learners are not rushing to cheat, they want ownership of their own work. They are a great source of honesty and inspiration. Post here.

- Parents worry more about the implications of AI on their children’s futures than they do about ‘AI cheating’.

- All of education is in this together and whatever comes next, approaches must ensure ethics, integrity, inclusion and equity.

- Teacher-librarians, edtech integrators, early adopters and critical friends are our most powerful assets and resources.

- Finding signals in the noise is hard, never-ending work in the field of AIEd. Read, learn, connect, discuss beyond the boundaries of the school and even education to get a sense of what is emerging.

- Focus on demonstrations of utility, not novelty. It’s fun to play with the cool new toys, but not everyone has the time for that – and sometimes superficial demonstrations can set people back.

Another big investment of time and energy over the last couple of years has been running our IB-NEASC Collaborative Learning Protocol and CIS Deep Dive reaccreditation pathways. Something of interest in these has been NEASC’s 4Cs model for innovation/change, and so here’s my interpretation of that in light of adapting to GenAI in schools:

What about some lingering questions?

There is still so much to explore and resolve in this field. I know our global community will come together and share, share, share. Here are a few questions on my mind:

- What will be the lived experiences of the next few rounds of our graduates as they leave our bubble and land in the highly variable approaches to GenAI of their destination universities or workplaces?

- Which pathways to personal and community success will open up for our learners over the next 5-10 years?

- After the initial wave of adaptation/mitigation-driven responses from our agencies and educational organisations, who will emerge with the most innovative and practical approaches?

- How do we ensure equitable, ethical and safe access to GenAI innovation for all learners, not just the most privileged?

- What will be the broader economic implications of all this, and how might it affect international schooling?

- What are the questions we don’t yet know to ask?

And what’s next?

Well… who can really know? But some things we’re working on…

- Continued innovation, adaptation, curation and creation.

- Continued interaction with and support for our learners.

- Continued connection with and contribution to the wider world of education, AI and innovation.

- Our school’s ALT Team (Advancing Learning with Technology), led by our Head of EdTech & IT, has recruited a diverse range of faculty to explore and innovate.

- Development and refinement of guidelines and professional development for the community.

- Connection with parent and industry experts locally for their insights and potential opportunities for our learners.

- Continuing my EdD studies and research on GenAI in international education, currently focusing on UNESCO’s guidance as part of a Policy unit.

- Continuing to develop our Profile of WAB Alumni as a set of domains and competencies for our learners, aligning with external agencies, internal guiding statements, innovation in learning, our strategic direction and the Mastery Transcript Consortium’s Mastery Learning Record.

So here we are

One year on, very ready for a break, and wondering what 2024 will bring. Will it be the year of AGI? What will this reflection look like another year from now?

I’ll close this post with some of the people who have inspired me in 2023:

- Prof. Maha Bali writes about AI in Education and Critical AI Literacies.

- Dr. Sarah Elaine Eaton is an expert in Academic Integrity, and writes about the age of Postplagiarism as related to AIEd.

- Prof. Ethan Mollick shares a lot of AI explorations and ideas, and writes on One Useful Thing.

- Leon Furze has excellent resources about teaching ethics in the age of AI.

- Dalton Flanagan has curated a powerful set of AI tools for Educators, and has been working with AI since before ChatGPT.

- Joe Dale has great resources for AI in language classrooms.

- Tricia Friedman has some great ideas about AI in terms of equity and inclusion.

- Jungwon Byun is one of the leads at Elicit.org, and writes about AI in academic research.

- Dr. Torrey Trust has great resources on the limitations of AI detection tools.

- Tom Barrett provides lots of useful resources for AI in schools.

- Sara Candela & Brian Lamb at The Optimalist write about attention in the age of AI.

- The University of Kent’s Digitally Enhanced Education webinars feature loads of great presenters from across the university sector.

And some of the tools I use a lot:

- Perplexity has almost completely replaced Google search for me. Here’s a guide.

- I have ChatGPT Plus and use it sometimes for Advanced Data Analysis (example here) and Vision (example here).

- Now that we have Bing Chat connected to our work accounts, I am using it more than ChatGPT.

- For image generation: I pay for Midjourney, and also use Bing Image Generator, Adobe Firefly and Ideogram.

- For video translation and avatars: HeyGen (here’s an example).

- For academic research: Elicit, SciSpace & Litmaps (post here).

- And Canva’s Magic Studio for editing images and presentations.

- Hugging Face for testing out new Spaces.

Thank you for your comments.